Date Published August 7, 2018 - Last Updated December 13, 2018

A service management roadmap is primarily useful for coordinating and implementing service improvements. A scorecard-based service management roadmap is one way to measure and then manage your continuous service improvement (CSI) activities. A scorecard-based approach uses a holistic source of inputs for CSI efforts and creates a very versatile output.

I recommend using Microsoft Excel, or any other spreadsheet tool, to create your scorecard. The flexibility and availability of support for the functions make this approach ideal for the iterative development of your scorecard. You can also create your roadmap with a purpose-built project management software tool. I recommend a five-step process for building a scorecard-based roadmap:

- Select or create a scorecard and a scoring method

- Define your goal (or target) scores and assign weight to the scorecard elements

- Assess the current performance level

- Analyze the current performance levels and prioritize the results

- Build your roadmap by defining and sequencing initiatives to achieve the goal (or target) levels

This approach is ideal for service managers and their leaders who want to create a service improvement roadmap to illustrate and guide their improvement efforts.

Select or Create, and Modify a Scorecard

The best way to select a scorecard for roadmapping is to start by determining the right framework to use. Consider the likelihood that there is an existing, widely accepted framework or model that can serve as a basis for your scorecard. It can be useful to leverage a set of benchmarked data as the basis for your scorecard because you can then track the progress of your continuous improvement initiatives against those same benchmarks. I’ll list some of the recommended frameworks and maturity models for support teams.

HDI Support Center Standard (HDI SCS). The HDI Support Center Standard is a mature and well-thought-out resource to help identify best practices for your support center. The HDI SCS is best employed as a scorecard for help desks, support centers, or other frontline support teams. Unmodified, it has less relevance for next-level support and engineering teams or development teams. It represents the unique perspective of the support center in the form of a rubric. That is to say, it has a built-in scoring method. The HDI SCS is extremely well-balanced because it accounts for the enabling factors and results of service excellence, including leadership and personnel management, among others.

ITIL Framework. The Information Technology Infrastructure Library (ITIL) framework describes best practices for the provisioning of IT services. The ITIL framework can be employed, as a whole or in part, to use as a scorecard for any service-oriented information technology teams, including next-level support and engineering teams. Be aware that the ITIL framework is heavily focused on processes. Using it to achieve well-rounded improvements requires your scoring mechanisms to account for other factors such as strategy, personnel management, tools, and culture.

Periodic Service Satisfaction Surveys. Many support teams employ transactional customer satisfaction surveys. These are short questionnaires sent to end users that are triggered by a service transaction, usually tracked in an incident or request ticket.

Periodic service satisfaction surveys are less commonly employed. They are conducted periodically (e.g., annually) and are sent with context to decision-making stakeholders as well as end users. Service satisfaction surveys measure how well a service organization’s results are aligned with the business needs of those receiving the service.

Initial results from a first service satisfaction survey become the baseline of your scorecard. The results from follow-on surveys are used to track the results of your improvement initiatives.

To be useful for roadmapping, a scorecard must have these elements:

- Elements (or Factors) to Score. These are the elements of the scorecard that you apply your scores to. They are what you are measuring. In the HDI SCS, these are activities in categories of Enablers and Results. For an ITIL-based scorecard, these are the ITIL processes, or possibly the ITIL process steps. For a periodic survey, these would be the survey questions.

- Scoring Method. This is the set of descriptive definitions for different levels of performance related to the elements of the scorecard, with associated numerical values for the different levels. This is how you are measuring. This can be a scale, such as 1 to 5, or it can be evaluative (poor, ok, good, great). But you must have numerical values associated with the different levels so that you can use mathematical functions for weighting and prioritizing.

- Goals or Target Levels. This provides a way to set the level of performance you are trying to achieve for each element or factor that you score. Achieving the highest level of maturity for every element on your scorecard may not be affordable or may not be suitable for what the stakeholders want. The difference between your current level of performance and each goal or target level is called a gap. Gaps are one useful way to prioritize improvement plans. For survey-based scorecards, there are alternatives to setting goals or target levels that are directly based on the scoring method. You can measure the percentage of favorable responses or ask respondents to assign a relative importance rating with each survey rating. The difference between the percentage of favorable responses and a target level, or the difference between a survey rating and its associated relative importance, is also a gap.

- Weighting Mechanism. In addition to being able to score each element, a good scorecard provides a way to assign more or less importance to each element or to categories of elements.

- Initiatives for Service Improvements Associated with Specific Elements. Each element or factor, with its performance level, gap, and weighting, provides an opportunity to link service improvement initiatives to the scorecard. It is these initiatives that form the actions contained on your roadmap.
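The five elements above can also be pictured as a single record per scorecard row. The following is a minimal Python sketch, not part of any framework: the class name, field names, the 1-to-4 scale, and the sample values are all assumptions made for illustration. In a spreadsheet, each field would simply be a column.

```python
from dataclasses import dataclass, field


@dataclass
class ScorecardElement:
    """One row of a scorecard (all field names are illustrative)."""
    name: str            # the element (or factor) being scored
    category: str        # grouping, e.g. a framework category or process
    current: float       # assessed level on the numeric scale
    target: float        # goal (or target) level
    weight: float = 1.0  # relative importance of this element
    initiatives: list[str] = field(default_factory=list)  # linked improvements

    @property
    def gap(self) -> float:
        """Difference between the target level and the current level."""
        return self.target - self.current


# Hypothetical sample row on an assumed 1-to-4 maturity scale.
element = ScorecardElement(
    name="Service level management",
    category="Leadership",
    current=2,
    target=3,
    weight=1.5,
    initiatives=["Publish service level targets"],
)
print(element.name, "gap:", element.gap)
```

A negative gap in this sketch would mean the element already exceeds its target, which can be a hint that effort is better spent elsewhere.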

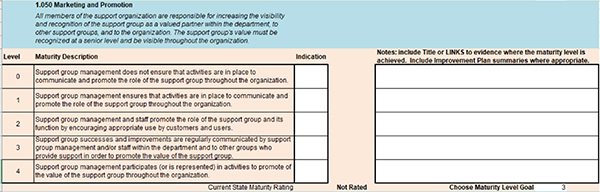

When your completed scorecard is combined with comparative data elements such as organization goals, gap calculations, and weight factors, it becomes a tool for both assessing and prioritizing areas that need improvement. You build your roadmap by linking initiatives for service improvements to the gaps and priorities. The first example below shows a single scorecard element (from the HDI SCS). The elements (or factors) to score have a definition of what the element is, with descriptions for each maturity level and a space for notes or links to evidence that supports the rating.

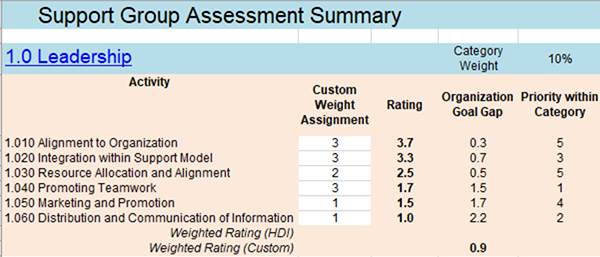

The next example shows an excerpt from a scorecard summary section that is used for setting custom weightings for the HDI SCS Leadership category.

The HDI Support Center Standard has a built-in scoring method that includes detailed descriptions of different levels of maturity for each of its activities. The ITIL body of knowledge includes a Process Maturity Framework, or you can adopt the very similar Capability Maturity Model Integration. The CMMI maturity framework is well-rounded and can also be applied to strategies and models that come from outside of your support organization.

Scoring methods for survey-based scorecards rely on combining the evaluative results from the survey with either target scores or assessments of relative importance. Combining target scores or relative importance with survey results facilitates a gap analysis technique that helps prioritize improvement initiatives.
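To make the two survey-based gap calculations concrete, here is a small Python sketch. The function names, the 1-to-5 rating scale, the "4 or higher counts as favorable" threshold, and the sample responses are assumptions for illustration only; use whatever scale and threshold your survey defines.

```python
def percent_favorable(ratings, favorable_min=4):
    """Share of responses at or above the favorable threshold, in percent."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    favorable = sum(1 for r in ratings if r >= favorable_min)
    return 100.0 * favorable / len(ratings)


def favorable_gap(ratings, target_percent, favorable_min=4):
    """Gap between the target percentage and the observed percentage."""
    return target_percent - percent_favorable(ratings, favorable_min)


def importance_gap(mean_rating, mean_importance):
    """Gap between how important an item is and how well it was rated."""
    return mean_importance - mean_rating


# Hypothetical responses to one survey question on an assumed 1-to-5 scale.
ratings = [5, 4, 3, 4, 2, 5, 4, 3, 4, 4]
print(percent_favorable(ratings))    # 70.0
print(favorable_gap(ratings, 85.0))  # 15.0
```

Either kind of gap can then feed the same prioritization step used for framework-based scorecards.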

Carefully choose your scoring method because as I like to say, “How you measure it is how you manage it.” If possible, pick a well-regarded scoring method that has already been accepted within your organization or one that is likely to be readily accepted by others.

Define Your Goals and Assign Weights

Once you have chosen a scoring method, it is simple to record your goals for each scorecard element. As noted above, this provides a way to set the level of performance you are trying to achieve for each element or factor that you score.

Weighting the scorecard creates a way to make different parts of the scorecard count more than others. Adjusting the weighting of your scorecard periodically is a good way to keep it aligned with changing priorities over time, as opposed to choosing a different scorecard when priorities change.
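As a rough illustration of how weighting changes a summary score without changing the scorecard itself, the Python sketch below computes a weighted average. The element names, scores, and weights are hypothetical, and a weighted average is only one possible weighting mechanism.

```python
def weighted_score(scores, weights):
    """Weighted average of element scores; weights need not sum to 1."""
    total_weight = sum(weights[name] for name in scores)
    if total_weight == 0:
        raise ValueError("at least one weight must be non-zero")
    weighted_sum = sum(scores[name] * weights[name] for name in scores)
    return weighted_sum / total_weight


# Hypothetical element scores on an assumed 1-to-4 scale.
scores = {"Strategy": 2, "Policies": 3, "Communication": 4}

# Same scores, two different sets of priorities.
equal_weights = {"Strategy": 1, "Policies": 1, "Communication": 1}
strategy_heavy = {"Strategy": 3, "Policies": 1, "Communication": 1}

print(weighted_score(scores, equal_weights))   # plain average
print(weighted_score(scores, strategy_heavy))  # low Strategy score counts more
```

When priorities shift, only the weights dictionary changes; the elements and their assessed scores stay comparable from one period to the next.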

Assess the Current Performance Level

If you are using a periodic survey as your framework, the survey results are your completed assessment, and you can move on to the next step. If you are using the HDI SCS or ITIL framework, for example, you must perform an assessment of your current performance level.

Your scorecard is an assessment tool. To maximize fairness, validity, and reliability, you must apply good practices for conducting assessments. The principles and details of these good practices are outside the scope of this article. As a general approach, however, I recommend that service managers do one of three things: look within their own organization for expertise in conducting assessments (imagine if you called your Internal Audit folks and invited them to help you), study up on good practices for conducting assessments (this is a career-enhancing skill to have), or hire an experienced consultant to perform the assessment in partnership with them. If you want to study up, I recommend Conducting Assessments: Evaluating Programs, Facilities, Agencies and Organizations by Dr. Bob Frost.

Service managers must first decide whether to perform an internal or an external assessment. External assessments are conducted by a person or team from outside the organization and are appropriate when the assessment requires an emphasis on objectivity and credibility. Internal assessments (a.k.a. self-assessments) are performed by a person or team that works for the organization and are appropriate when the emphasis is on pursuing cost-effective continuous improvement.

If the knowledge, skills, and ability to perform the assessment exist within the organization, an internal assessment is a good way to start. Performing an internal assessment first allows service managers to validate the scorecard elements and the scoring method. This approach also allows the service manager to determine if it is worthwhile to invest in an external assessment.

You will want to train your assessors on the scorecard’s elements, the context of its model or framework, and the scoring method. The internal assessors should also be trained in the principles of performing high quality assessments. To actually do the work of performing an assessment, Dr. Frost outlines these steps:

- Enlist a qualified assessment leader

- Define and scope the assessment, plan the timeline, and identify stakeholder interests

- Enlist qualified assessors

- Specify criteria and standards, build assessment tools

- Train assessor teams

- Prepare for evidence collection

- Collect data/evidence

- Analyze data/evidence (and fact check)

- Evaluate

- Report

- Collect and document lessons-learned

One of the principles of high-quality assessments is to separate each stage: establish criteria for your assessment first, then gather evidence, and then evaluate the evidence. Developing criteria first helps determine what evidence is required. Premature evidence collection can improperly influence the criteria. Each stage should be monitored by a sponsor-level person supervising the entire assessment.

Capturing evidence for how each scorecard rating was assigned is strongly recommended. For standards- or framework-based scorecards, the evidence should be things like links to documented policies and procedures, or to key performance indicators, or even meeting notes—anything that sheds light on the specific reasons for a specific rating.

While the responses from a survey are a form of evidence reflecting the point of view of the respondents, you may need to investigate the characteristics of the customer’s experience that formed the basis of their response. Reports related to those types of investigations become supporting evidence for the survey results.

Numerical-based evidence is quantitative. Comparison- or attribute-based evidence is qualitative. Both kinds of evidence are important for assessments. Not all quantitative evidence is created equal. And many times, the most fruitful qualitative evidence is unstructured. Therefore, as Dr. Frost notes, all evidence should be analyzed systematically and purposefully.

Analyze Current Performance Levels

Once you’ve collected your ratings and evidence, analyze the current performance levels to understand where the assessment results demonstrate strengths and uncover improvement opportunities. If you’ve used a spreadsheet tool for your scorecard, you will be able to graph, chart, or use conditional formatting to visually summarize your results.
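If you want to see the logic behind a conditional-formatting rule, the Python sketch below maps each gap to a color band. The band names, the threshold of 1.0, the element names, and the ratings are all assumptions for illustration; a spreadsheet color scale does the same job with less effort.

```python
def heat_band(gap, amber_max=1.0):
    """Map a gap to a color band (thresholds are assumptions, not a standard)."""
    if gap <= 0:
        return "green"  # target met or exceeded
    if gap <= amber_max:
        return "amber"  # small gap
    return "red"        # large gap


# Hypothetical (element, current, target) ratings on an assumed 1-to-4 scale.
ratings = [
    ("Strategy", 2, 4),
    ("Policies", 3, 4),
    ("Communication", 4, 4),
]

for name, current, target in ratings:
    gap = target - current
    print(f"{name:<15} current={current} target={target} {heat_band(gap)}")
```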

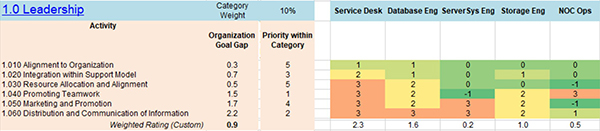

Heat maps are extremely useful as a visual aid for analysis. Ranking that incorporates the identified gaps and the assigned weighting is very useful for prioritizing the areas that need improvement. Please note that priority and sequence are not always the same thing. Analyze the scorecard and derive a prioritized list of scorecard elements to apply your improvement efforts to. Use your experience to plan the sequence of improvement efforts, factoring in effort, impact, and your organization’s ability to undergo changes.
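One simple way to combine gaps and weights into a ranking is to multiply them, as in the Python sketch below. The formula (weight times gap), the element names, and the numbers are assumptions for illustration rather than a prescribed method, and the result is a priority list, not a sequence.

```python
def prioritize(elements):
    """Return (name, priority) pairs sorted from highest priority to lowest.

    Each input item is (name, current, target, weight). Priority is the
    weight multiplied by the gap, with gaps below zero treated as zero.
    """
    ranked = [
        (name, weight * max(target - current, 0))
        for name, current, target, weight in elements
    ]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Hypothetical rows: (name, current, target, weight) on an assumed 1-to-4 scale.
elements = [
    ("Strategy", 2, 4, 1.0),       # gap 2, priority 2.0
    ("Policies", 3, 4, 3.0),       # gap 1, priority 3.0
    ("Communication", 4, 4, 2.0),  # gap 0, priority 0.0
]

for name, priority in prioritize(elements):
    print(f"{name:<15} {priority:.1f}")
```

Note that Policies outranks Strategy here despite its smaller gap, because its weight is higher. Sequencing still calls for judgment about effort, impact, and capacity for change.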

The figure below shows the use of the heat map function in Excel to visually analyze the HDI SCS Leadership category.

Build Your Roadmap

Build your roadmap by defining and sequencing improvement efforts that will achieve the goal (or target) levels. When you have specific areas from your scorecard to target for improvement, it may be a good idea to get input from your peers or from your professional network on changes to make that have a high likelihood of success. The list of improvement efforts and their sequence become the basis of your roadmap.
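As a rough sketch of turning a sequenced list into a timeline, the Python below places initiatives back to back from a start date. The initiative names, durations, and start date are hypothetical, and running initiatives strictly one after another is a simplifying assumption; real roadmaps usually have overlapping work.

```python
from datetime import date, timedelta


def build_roadmap(initiatives, start):
    """Place initiatives back to back on a timeline, in the order given.

    Each input item is (name, category, duration_in_weeks).
    """
    roadmap = []
    cursor = start
    for name, category, weeks in initiatives:
        end = cursor + timedelta(weeks=weeks)
        roadmap.append(
            {"name": name, "category": category, "start": cursor, "end": end}
        )
        cursor = end  # the next initiative starts when this one ends
    return roadmap


# Hypothetical initiatives, already in the sequence you decided on.
initiatives = [
    ("Publish service level targets", "Quick Hit", 2),
    ("Redesign escalation procedure", "Operational", 6),
    ("Introduce knowledge management", "Strategic", 12),
]

for item in build_roadmap(initiatives, date(2019, 1, 7)):
    print(f"{item['start']} to {item['end']}  [{item['category']}] {item['name']}")
```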

As noted on this continuous improvement toolkit website, a service improvement roadmap serves the same purpose as a road map in your car when you’re trying to drive to a destination: you need to know and understand where you are now, and you need to find the way to reach your target (destination). To gain the improvements, each roadmap item is then broken down into a project or action plan and executed.

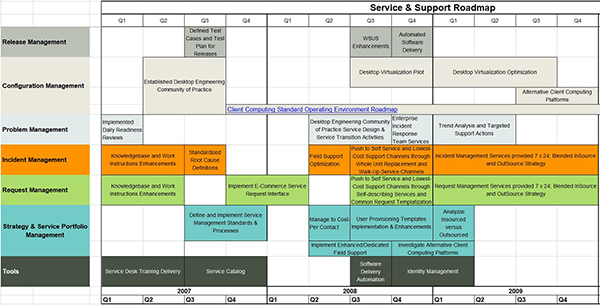

At a minimum, your service improvement roadmap should include a timeline and service improvement initiatives or activities placed along that timeline. The initiatives or activities can be grouped into categories, for example, by support team, by process, or by level of effort. At a more advanced level, your service improvement roadmap can be augmented with budget information or can identify key stakeholder groups. The example below shows a basic roadmap organized by ITIL process.

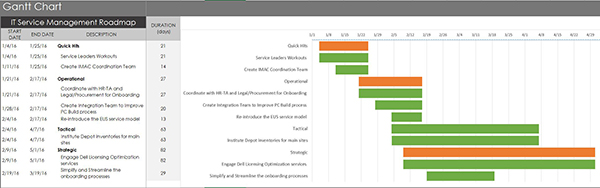

The next example shows a service improvement roadmap depicted as a Gantt chart that is grouped by the complexity of improvement initiative (Quick Hit, Operational, Tactical, Strategic).

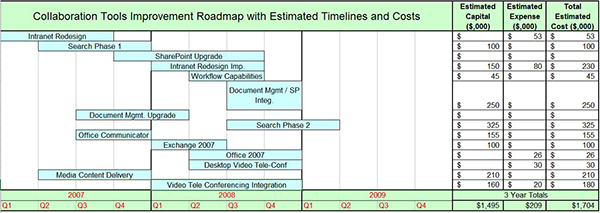

This example shows a service improvement roadmap for Collaboration Tools and includes the names of a series of interrelated projects on a timeline with each project’s estimated budget.

Use Your Service Improvement Roadmap

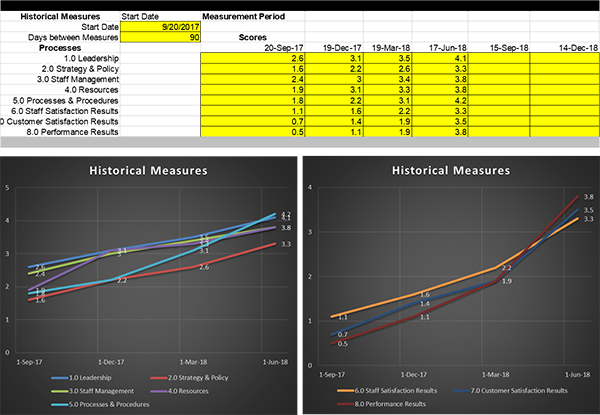

The example below shows the results from a series of measurements over several 90-day periods, with graphs showing the effect of service improvement initiatives on scorecard results.

Your service management roadmap is primarily useful for coordinating and implementing service improvements. Coordination includes communication both inside and outside your support team. The service improvement changes or initiatives on the map must be planned and executed in detail. Make sure to employ organizational change management practices for your improvement initiatives. Strongly consider employing project and program management techniques as part of coordinating and implementing service improvements.

These kinds of roadmaps are versatile because they can be used as a communications instrument, to track your progress, and as a catalyst for increasing the value delivered by your support organization.

The same scorecard you used to build your service improvement roadmap is also a way to measure the success of your service improvement efforts. In addition to the required scorecard elements noted above, consider adding an historical measures section to your scorecard where you can capture periodic measurements over time. A set of periodic measurements based on reassessments can be used to show progress.
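A historical measures section can be as simple as one list of scores per element, with one entry per reassessment. The Python sketch below, using hypothetical element names and scores, computes the change from the baseline to the latest measurement.

```python
def progress(history):
    """Change from the first (baseline) score to the latest, per element."""
    return {
        name: values[-1] - values[0]
        for name, values in history.items()
        if values  # skip elements that have not been measured yet
    }


# Hypothetical scores from three successive assessments (assumed 1-to-4 scale).
history = {
    "Strategy": [2, 2, 3],
    "Policies": [3, 3, 3],
    "Communication": [3, 4, 4],
}

for name, change in progress(history).items():
    print(f"{name:<15} {change:+d}")
```

Elements that show no change over several periods are worth a second look: either the linked initiative has not landed yet, or the element was not a priority.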

Bill Payne is a results-driven IT leader and an expert in the design and delivery of cost-effective IT solutions that deliver quantifiable business benefits. His more than 30 years of experience at companies such as Pepsi-Cola, Whole Foods Market, and Dell, includes data communications consulting, messaging systems analyst, managing multiple infrastructure support and engineering teams, medical information systems deployment, retail and infrastructure systems management, organizational change management, and IT service management consulting. Leveraging his experience in leading, managing, and executing both technical and organizational transformation projects in numerous industries, Bill currently leads his own service management consulting company. Find him on LinkedIn, and follow him on Twitter @ITSMConsultant.